The Token Trap: Why Your Favorite AI Coding Tool Wants You to Stay Hungry (But Not Too Hungry)

You know that moment when you’re crushing code in Cursor at 2 AM, and suddenly everything... stops?

Not a crash. Not a bug. Just a polite little message: “You’ve reached your monthly limit.”

And you sit there, staring at half-written code, a deadline looming, thinking: I’m literally paying $20/month. How did I run out?

Welcome to the most fascinating tightrope walk in tech: the companies closest to you—the ones building the tools you actually use—are trapped in an economic vise they can’t escape.

And you’re the squeeze.

The Goldilocks Problem: Not Too Hot, Not Too Cold

Let’s talk about Cursor, GitHub Copilot, Cody, Replit—any AI coding assistant you’ve fallen in love with.

These companies face an impossible balancing act:

Give you too little: You churn. You rage-quit to a competitor. You post angry tweets. You tell your friends not to bother.

Give you too much: They go bankrupt. Every keystroke you make costs them real money, and if you’re a power user, they’re losing money on you.

The sweet spot? Keep you just satisfied enough to stay, but just constrained enough to make them money.

It’s like Goldilocks, except the bears are shareholders and the porridge is token budgets.

The Math That Doesn’t Math

Here’s what you think you’re buying when you pay $20/month for Cursor Pro:

“I get smart code completions, AI chat, and debugging help. Cool.”

Here’s what you’re actually buying:

Monthly subscription: $20

Your actual usage: 500 completions + 100 chat sessions

Token consumption:

├── 500 code completions × 2,000 tokens = 1M tokens

├── 100 chat sessions × 3,000 tokens = 300K tokens

└── Total: 1.3M tokens

Backend API costs (at market rates, assuming a 70/30 input/output split):

├── Input: 910K tokens × $5/M = $4.55

├── Output: 390K tokens × $15/M = $5.85

└── Total cost to Cursor: $10.40

Cursor’s margin on you: $9.60 (48%)

That’s the average user. Not terrible margins.
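The arithmetic above fits in a few lines of Python. This is a minimal sketch that assumes the $5/M input and $15/M output rates and the 70/30 input/output split implied by the 910K/390K breakdown. The function names are illustrative and do not come from Cursor.

```python
# Back-of-envelope model of what one subscriber costs the tool vendor.
# Assumed rates (from the example): $5 per 1M input tokens, $15 per 1M
# output tokens, with 70% of all tokens being input.
INPUT_PRICE_PER_M = 5.00
OUTPUT_PRICE_PER_M = 15.00
INPUT_PERCENT = 70


def monthly_tokens(completions: int, tokens_per_completion: int,
                   chats: int, tokens_per_chat: int) -> int:
    """Total tokens a user consumes in one month."""
    return completions * tokens_per_completion + chats * tokens_per_chat


def backend_cost(total_tokens: int) -> float:
    """Dollar cost of total_tokens under the assumed prices and split."""
    input_tokens = total_tokens * INPUT_PERCENT // 100
    output_tokens = total_tokens - input_tokens
    return (input_tokens * INPUT_PRICE_PER_M
            + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


def margin(price: float, cost: float) -> tuple[float, float]:
    """Gross margin in dollars and as a fraction of the subscription price."""
    return price - cost, (price - cost) / price


tokens = monthly_tokens(500, 2_000, 100, 3_000)
cost = backend_cost(tokens)
dollars, fraction = margin(20.00, cost)
print(f"{tokens:,} tokens cost ${cost:.2f}; margin ${dollars:.2f} ({fraction:.0%})")
# prints: 1,300,000 tokens cost $10.40; margin $9.60 (48%)
```

Changing the split or the per-million rates is a one-line edit, which makes it easy to see how sensitive the margin is to output-heavy usage.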

Now meet Sarah, the power user:

Sarah’s usage: 2,000 completions + 500 chat sessions

Token consumption:

├── 2,000 completions × 2,500 tokens = 5M tokens

├── 500 chat sessions × 4,000 tokens = 2M tokens

└── Total: 7M tokens

Backend costs: $56.00 (at the same 70/30 split: 4.9M input × $5/M + 2.1M output × $15/M)

Cursor’s revenue from Sarah: $20

Cursor’s margin on Sarah: -$36 (LOSS)

Sarah is losing them money. Every. Single. Month.

So what does Cursor do? They institute rate limits.

“500 fast requests per month, then you get throttled.”

And Sarah hits that limit on day 12.

The Three Paths Forward (All of Them Suck)

When Sarah hits her limit, Cursor has three options:

Option 1: Let Her Keep Going (Bankruptcy Plan)

If they let every power user consume unlimited tokens, they’d be out of business in six months. The economics simply don’t work.

The backend providers aren’t giving discounts that make unlimited sustainable. Cursor would need to charge $100+/month to break even on power users—but that kills their competitive positioning.

Option 2: Hard Limit Her (Churn Guarantee)

Cap her at 500 requests. When she hits it, she’s done for the month.

Sarah gets frustrated and churns to GitHub Copilot. Copilot has different limits but the same fundamental problem. Eventually, she bounces between tools, never quite happy anywhere.

Cursor loses a customer. Their competitor doesn’t really gain one—they inherit the same loss-making user.

Option 3: Upsell Her to “Pro Plus Mega Ultra” ($50/month)

Give Sarah more tokens for more money. Maybe 2,000 fast requests instead of 500.

This is the path most tools choose. It delays the problem but doesn’t solve it. Sarah now costs them:

Sarah’s new usage: 4,000 completions + 1,000 chats

Backend cost: $112 (14M tokens at the same rates)

Revenue: $50

Margin: -$62 (EVEN BIGGER LOSS)

The only way to make money on Sarah is to charge her $120-150/month.

But Sarah works at a startup. Her boss won’t approve $150/month for a code editor. She’s not paying it herself.

So Cursor loses the upsell or loses more money on the upsell. Catch-22.
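Running Sarah through a similar model shows why neither plan works. This sketch assumes the same $5/$15 per-million rates and 70/30 input/output split as the average-user example, along with Sarah's heavier 2,500-token completions and 4,000-token chats. Under those assumptions it puts her backend cost at $56 on Pro and $112 on the upsell, and for a given usage level the break-even price is simply that backend cost.

```python
# Sarah's plans under the same assumptions as the average-user example:
# $5 per 1M input tokens, $15 per 1M output tokens, 70% of tokens are input.
def backend_cost(total_tokens: int) -> float:
    input_tokens = total_tokens * 70 // 100
    output_tokens = total_tokens - input_tokens
    return (input_tokens * 5.00 + output_tokens * 15.00) / 1_000_000


def plan_economics(price: float, completions: int, chats: int,
                   tokens_per_completion: int = 2_500,
                   tokens_per_chat: int = 4_000) -> tuple[float, float]:
    """Return (backend cost, margin) for one month on a plan priced at `price`.

    The backend cost doubles as the break-even price for that usage level.
    """
    tokens = completions * tokens_per_completion + chats * tokens_per_chat
    cost = backend_cost(tokens)
    return cost, price - cost


plans = [
    ("Pro at $20", 20.00, 2_000, 500),
    ("Upsell at $50", 50.00, 4_000, 1_000),
]
for name, price, completions, chats in plans:
    cost, plan_margin = plan_economics(price, completions, chats)
    print(f"{name}: backend ${cost:.2f}, margin {plan_margin:+.2f}")
```

Because Sarah's usage doubles along with the plan limit, the loss grows faster than the price does.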

The Secret Fourth Option: The Squeeze

What actually happens is more subtle. These tools implement soft friction:

Technique 1: The Slowdown

First 500 requests: Lightning fast

Next 500 requests: “Processing...” (3 seconds)

After 1,000: “Processing...” (8 seconds)

You don’t technically hit a limit, but using the tool becomes annoying enough that you self-limit.

Technique 2: The Downgrade

First 500: GPT-4o (best model)

Next 500: GPT-4o-mini (cheaper model)

After 1,000: GPT-3.5 (cheapest model)

Quality degrades, you use it less, costs go down.
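Techniques 1 and 2 share the same mechanism underneath: a per-user request counter mapped to a service tier. Here is a minimal sketch that uses the illustrative thresholds from the two lists above (500 and 1,000 requests). Each tier pairs a model with a delay only to keep the example compact; a real tool might pull either lever on its own. None of this reflects any vendor's published policy.

```python
from bisect import bisect_right
from typing import NamedTuple


class Tier(NamedTuple):
    model: str      # backend model that serves the request
    delay_s: float  # artificial latency added before responding


# Hypothetical policy built from the example numbers above.
THRESHOLDS = [500, 1_000]        # requests already used this month
TIERS = [
    Tier("gpt-4o", 0.0),         # first 500 requests
    Tier("gpt-4o-mini", 3.0),    # next 500
    Tier("gpt-3.5-turbo", 8.0),  # everything after 1,000
]


def tier_for(requests_used: int) -> Tier:
    """Pick the tier for the next request, given how many were already used."""
    return TIERS[bisect_right(THRESHOLDS, requests_used)]


for used in (0, 499, 500, 1_000, 2_500):
    print(used, tier_for(used))
```

`bisect_right` counts how many thresholds the user has already passed, which is exactly the index of their current tier.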

Technique 3: The Feature Gate

Unlimited autocomplete: Always available (cheap)

AI chat: 100 sessions/month (expensive)

Claude for coding: 50 sessions/month (most expensive)

Multi-file edits: 25 sessions/month (insanely expensive)

The most valuable features get the tightest limits. You can use the tool “unlimited,” but the parts that actually solve hard problems? Those are metered.

Brilliant. Profitable. Maddening.
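Underneath, a feature gate is a separate quota for each feature, with the cheap features left unmetered. The sketch below is hypothetical: the feature names and monthly limits mirror the illustrative list above, and a real service would persist the counters per user instead of holding them in memory.

```python
from collections import Counter
from typing import Optional

# Monthly quota per feature; None means unmetered.
QUOTAS: dict[str, Optional[int]] = {
    "autocomplete": None,
    "chat": 100,
    "claude_chat": 50,
    "multi_file_edit": 25,
}


class FeatureGate:
    """Tracks one user's monthly usage against per-feature quotas."""

    def __init__(self, quotas: dict[str, Optional[int]]) -> None:
        self.quotas = quotas
        self.used: Counter[str] = Counter()

    def try_use(self, feature: str) -> bool:
        """Count one use and return True, or return False if the quota is spent."""
        limit = self.quotas[feature]
        if limit is not None and self.used[feature] >= limit:
            return False
        self.used[feature] += 1
        return True

    def reset_month(self) -> None:
        """Clear all counters at the start of a billing period."""
        self.used.clear()


gate = FeatureGate(QUOTAS)
granted = sum(gate.try_use("multi_file_edit") for _ in range(30))
print(granted)  # 25
```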

The Churn Dance: Nobody’s Happy, Everyone’s Stuck

Here’s the dirty secret: every AI tool has the same problem.

Cursor, Copilot, Cody, Replit, Tabnine—they’re all stuck between the same rock and hard place:

Backend token costs are high and non-negotiable

Users expect unlimited or near-unlimited usage

Competitive pressure keeps prices around $10-30/month

Unit economics are underwater for heavy users

So users do the churn dance:

Month 1: Sign up for Cursor. Love it. Use it constantly.

Month 2: Hit rate limits. Frustrated. Search for alternatives.

Month 3: Switch to GitHub Copilot. Fresh limits! Use it constantly.

Month 4: Hit rate limits. Back to Cursor? They’ve “upgraded” their limits!

Month 5: Realize all tools are playing the same game.

Month 6: Bitter acceptance. Pick one. Live with limits.

Nobody wins:

Users are frustrated

Tools face constant churn (20-40% annual)

Customer acquisition costs ($50-150) barely get recovered

Net Promoter Scores are middling despite amazing tech

The Great Benefit: Data, Positioning, and the Land Grab

So why do these companies even exist? If the economics are so broken, why not just use the raw APIs directly?

Because they’re not selling tokens. They’re selling convenience + UX + positioning for the future.

Benefit 1: The Data Moat

Every completion you accept, every chat message you send, every bug you fix—Cursor is watching.

Not in a creepy way (probably). But they’re learning:

What code patterns you prefer

What questions you ask

What completions you accept vs. reject

What makes you more productive

This data is gold. It trains their models on real-world usage. It helps them optimize which backend model to route requests to. It shows them what features to build next.

The raw APIs don’t give them this. Cursor’s value isn’t the AI—it’s the integration layer that learns how developers actually work.

Benefit 2: Brand Position in an AI-First World

Cursor isn’t trying to make money on $20/month subscriptions. They’re trying to become the default coding environment for the AI age.

Think about it:

Netscape lost the browser war to Internet Explorer (which later lost to Chrome)

Sublime Text lost the editor war to VS Code

VS Code might lose to... Cursor?

The end game isn’t subscription revenue. It’s:

Acquisition: Get bought for $1-5B by Microsoft, Google, or someone desperate for distribution

Enterprise: Once you own the developer workflow, sell $50-100/seat/month to companies

Platform: Become the place where AI coding happens, then monetize the ecosystem

The $20/month consumer tier is a loss leader for a much bigger play.

Benefit 3: The Pricing Power Treadmill

Right now, tools are in a race to the bottom on price. But that won’t last forever.

As these tools become essential infrastructure—as you literally can’t be productive without them—pricing power shifts.

Think about how this evolves:

Phase 1 (Now): Aggressive land grab, race to lowest price

Cursor: $20/month

Copilot: $10/month for individuals, $19 for business

Price competition keeps margins razor-thin

Phase 2 (12-18 months): Consolidation starts

Smaller players die or get acquired

2-3 dominant tools emerge

Switching costs increase (you’ve customized, integrated, trained)

Phase 3 (2-3 years): Pricing normalization

Leaders slowly raise prices: $30, $40, $50/month

Users grumble but pay (productivity gains justify it)

Enterprise tiers hit $75-150/seat/month

Margins improve to 60-70%

Phase 4 (3-5 years): Infrastructure pricing

Tools become as essential as Slack or Figma

$100-200/seat/month for teams

Nobody questions it anymore

Finally, sustainable economics

This is the long game. Lose money now, make money later.

The Hidden Winner: The Middle Layer Gets the Margin

Here’s the twist: the companies closest to you might actually capture the most value.

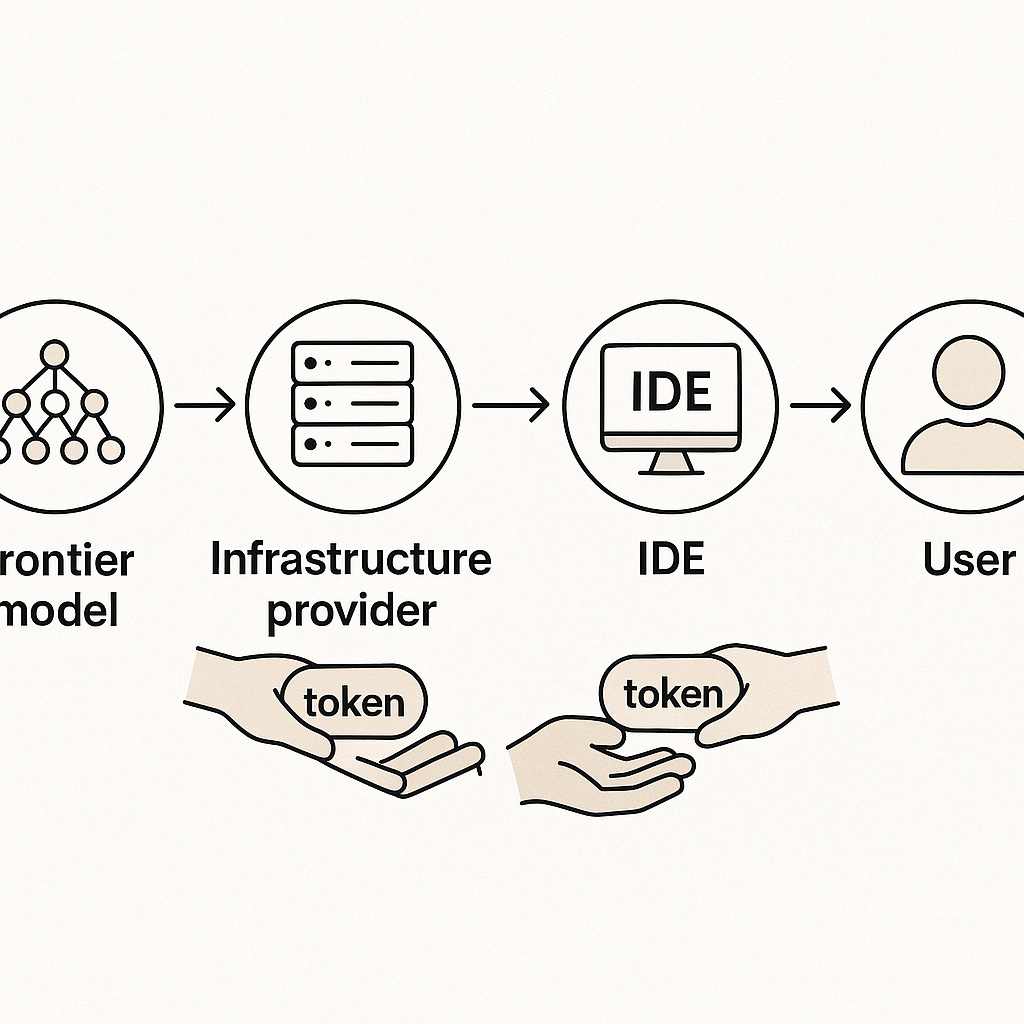

Let’s look at the value chain:

Backend Model Providers:

├── Margins: 70-90% (insane)

├── But: Commoditizing fast

└── Future: Race to zero with open source

Middleware/Tools (Cursor, Copilot):

├── Margins: -10% to 30% (brutal now)

├── But: Creating lock-in through UX

└── Future: 60-70% margins once consolidated

End Users:

├── Cost: $20-50/month now

├── Value: 10-50x productivity gains

└── Future: $100-200/month, still worth it

The middle layer—the consumer-facing tools—look like they’re getting squeezed. And they are!

But they’re also building moats:

Data feedback loops: Better completions from your usage patterns

Workflow integration: Hooks into your git, terminal, debugging

Team collaboration: Shared context, codebases, preferences

Switching costs: Muscle memory, custom shortcuts, projects

The backend providers are fungible. You can swap models easily.

But switching from Cursor to another editor? That’s painful. You’ve customized everything. Your team uses it. You’ve got 37 keyboard shortcuts memorized.

That pain = pricing power = future margin.

The Feature Treadmill: Why Everything Costs More Tomorrow

Remember when code completion was the whole product? Pepperidge Farm remembers.

Now every tool is in an arms race to add features:

Six Months Ago:

Code completion: 500 tokens per completion

Monthly usage: 500 completions = 250K tokens

Cost: Manageable

Today:

Code completion: Still 500 tokens

AI chat: 3,000 tokens per session

Multi-file edits: 15,000 tokens per session

Agent mode (autonomous coding): 50,000+ tokens per session

Monthly usage: 300 completions + 50 chats + 20 multi-file + 10 agent sessions

Total tokens: 1.1M tokens (more if agent sessions run past 50,000)

Cost: 4.4x higher

Six Months From Now:

Everything above, plus:

Autonomous debugging: 100,000 tokens per session

Codebase-wide refactoring: 200,000 tokens per session

AI pair programming (constant context): 500,000 tokens per session

Total tokens: 5M+ tokens

Cost: 20x higher than a year ago
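You can check the first two snapshots directly. This quick sketch uses the per-session token counts listed above and takes agent mode at its 50,000-token floor (the text says 50,000+). It leaves out the six-months-from-now snapshot because that one gives no session counts. At the floor, the listed sessions come to 1.1M tokens, so any total above that comes from longer agent sessions.

```python
# Tokens per session for each feature, from the snapshots above.
TOKENS_PER_SESSION = {
    "completion": 500,
    "chat": 3_000,
    "multi_file_edit": 15_000,
    "agent": 50_000,  # a floor: the text says "50,000+"
}


def monthly_tokens(sessions: dict[str, int]) -> int:
    """Sum tokens across features for one month of usage."""
    return sum(TOKENS_PER_SESSION[feature] * count
               for feature, count in sessions.items())


six_months_ago = monthly_tokens({"completion": 500})
today = monthly_tokens({"completion": 300, "chat": 50,
                        "multi_file_edit": 20, "agent": 10})
print(f"{six_months_ago:,} -> {today:,} tokens ({today / six_months_ago:.1f}x)")
# prints: 250,000 -> 1,100,000 tokens (4.4x)
```

The ten agent sessions alone account for 500K tokens, nearly half the month, which is why agentic features dominate the cost curve.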

Each new feature makes the product more valuable. But each new feature multiplies token consumption.

The tool gets better, but the economics get worse.

Which means:

Limits get tighter

Tiers get more expensive

Power users get squeezed harder

It’s not greed. It’s just math.

The Great Irony: You Want What They Can’t Afford to Give You

Here’s the fundamental tension:

You want:

Unlimited AI assistance

Always the best model

Instant responses

No throttling

$10-20/month

They want to give you that. They really do. Imagine the reviews:

“Cursor is UNLIMITED! I use it 24/7 and never hit a limit! 5 stars!”

That’s the dream. Happy customers. Viral growth. Market dominance.

Except the backend costs make it impossible. The unit economics literally don’t work.

So instead, you get:

Metered AI assistance

Model mixing (cheap when possible, expensive when necessary)

“Processing...” delays

Soft throttling

$20-50/month (and climbing)

Neither side is wrong. You’re not entitled for wanting more. They’re not greedy for limiting you.

It’s just... the economics don’t align.

You experience AI as magic. Zero marginal cost, infinite scale, pure intelligence.

They experience AI as manufacturing. Every completion has a real cost. Every chat session eats into margin. Every power user is a potential loss.

The magic is real. But it’s not free. And that mismatch creates all the friction.

The Survival Strategies: How Tools Stay Alive

The tools that survive this squeeze will use some combination of:

Strategy 1: Model Arbitrage

Route every request to the cheapest model that can handle it (prices are per million input tokens):

Simple completions: GPT-4o-mini ($0.15/M)

Complex code: GPT-4o ($5/M)

Reasoning needed: o1-mini ($3/M)

Multi-file: Claude Sonnet ($3/M)

Savings: 60-80% on backend costs by smart routing.
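Smart routing comes down to a classifier plus a lookup table. In this sketch the routing table mirrors the list above and the listed per-million input prices are used. Requests are assumed to arrive already classified; classification is the hard part and is not shown. The 70/20/10 traffic mix is hypothetical. For that mix the saving comes out around 72%, but a different mix would give a different number.

```python
# Input price in dollars per 1M tokens, as listed above. Keys are labels,
# not necessarily exact API model IDs.
PRICE_PER_M = {
    "gpt-4o-mini": 0.15,
    "gpt-4o": 5.00,
    "o1-mini": 3.00,
    "claude-sonnet": 3.00,
}

# Request kind -> cheapest model assumed able to handle it.
ROUTES = {
    "simple_completion": "gpt-4o-mini",
    "complex_code": "gpt-4o",
    "reasoning": "o1-mini",
    "multi_file": "claude-sonnet",
}


def route(kind: str) -> str:
    """Return the model a request of this kind should be sent to."""
    return ROUTES[kind]


def blended_price(mix: dict[str, float]) -> float:
    """Average $/1M input tokens for a traffic mix whose shares sum to 1."""
    return sum(share * PRICE_PER_M[route(kind)] for kind, share in mix.items())


# Hypothetical traffic mix, compared with sending everything to gpt-4o.
mix = {"simple_completion": 0.70, "complex_code": 0.20, "multi_file": 0.10}
routed = blended_price(mix)
baseline = PRICE_PER_M["gpt-4o"]
print(f"${routed:.3f}/M routed vs ${baseline:.2f}/M -> {1 - routed / baseline:.0%} saved")
```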

Strategy 2: Aggressive Caching

Cache system prompts, codebases, project context:

First request: 10,000 tokens at the full input price

Cached subsequent requests: billed like ~1,000 tokens (cache reads at a 90% discount)

Savings: 50-70% for returning users.
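Prompt caching does not shrink the prompt. It discounts the repeated part. This sketch assumes cache reads cost 10% of the normal input price (the 90% discount above) and that every request resends the same context prefix. The request count and token sizes in the usage lines are hypothetical. The overall saving depends heavily on how much fresh, uncached text each request carries.

```python
def billed_input_tokens(requests: int, prefix_tokens: int, fresh_tokens: int,
                        use_cache: bool, read_multiplier: float = 0.10,
                        write_multiplier: float = 1.0) -> float:
    """Input tokens billed at the full rate across a run of requests.

    Each request resends the same `prefix_tokens` of context plus
    `fresh_tokens` of new content. With caching, the first request writes the
    prefix (set `write_multiplier` above 1.0 if a provider charges extra for
    cache writes) and later requests read it at `read_multiplier` of the price.
    """
    if requests <= 0:
        return 0.0
    if not use_cache:
        return float(requests * (prefix_tokens + fresh_tokens))
    first = prefix_tokens * write_multiplier + fresh_tokens
    later = (requests - 1) * (prefix_tokens * read_multiplier + fresh_tokens)
    return first + later


# Hypothetical session: 20 requests, 10,000 tokens of shared project
# context, 3,000 fresh tokens (question plus code) per request.
plain = billed_input_tokens(20, 10_000, 3_000, use_cache=False)
cached = billed_input_tokens(20, 10_000, 3_000, use_cache=True)
print(f"{plain:,.0f} vs {cached:,.0f} billed tokens: {1 - cached / plain:.0%} saved")
# prints: 260,000 vs 89,000 billed tokens: 66% saved
```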

Strategy 3: The Freemium Extreme

Make the free tier SO limited it’s basically a demo:

50 completions/month

No chat

No advanced features

Throttled responses

Then make Pro tier ($20) feel like a steal by comparison.

Conversion rate: 5-10% of free users, but those who convert stay longer.

Strategy 4: The Enterprise Bet

Stop focusing on consumers. Go all-in on teams:

$50-100/seat/month

Volume discounts at 20+ seats

Contracts lock in revenue

Upsell admin features, analytics, compliance

This is GitHub Copilot’s strategy. Consumer tier is a loss leader for enterprise sales.

Strategy 5: The Vertical Focus

Don’t try to be everything to everyone. Own a niche:

Replit: Education and beginners

Cursor: Professional developers

Amazon Q: AWS ecosystem

Codium: Testing specialists

Narrower focus = better unit economics = sustainable margins.

Strategy 6: The Hail Mary (Own the Model)

Build your own models. Cut out the backend provider markup:

Training cost: $50-200M (insane)

Inference cost: $0.50-1.50/M tokens (vs. $5-15/M buying)

Risk: Enormous. Reward: Potentially huge.

This is the Replit strategy. They’re training their own code models to escape the provider squeeze.
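The scale of that bet is easy to quantify, because training pays for itself only after the per-token inference savings have covered it. Here is a rough sketch using the ranges above. It ignores retraining, staff, and any serving costs beyond the quoted per-token price, so treat the result as an order-of-magnitude figure.

```python
def breakeven_tokens_m(training_cost: float, buy_price_per_m: float,
                       own_price_per_m: float) -> float:
    """Millions of tokens that must be served before savings repay training."""
    savings_per_m = buy_price_per_m - own_price_per_m
    if savings_per_m <= 0:
        raise ValueError("own inference must be cheaper than buying")
    return training_cost / savings_per_m


# Most favorable corner of the ranges: cheap training, widest price gap.
best = breakeven_tokens_m(50e6, 15.00, 0.50)
# Least favorable corner: expensive training, narrowest price gap.
worst = breakeven_tokens_m(200e6, 5.00, 1.50)
print(f"break-even after {best / 1e6:.1f}T to {worst / 1e6:.1f}T tokens")
# prints: break-even after 3.4T to 57.1T tokens
```

Trillions of tokens is why only companies with very large user bases can realistically attempt this.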

What This Means for You (The Actually Useful Part)

You’re not powerless here. Understanding the economics changes how you engage:

1. Abuse the Free Tiers (Ethically)

Most tools give you some free usage. Use it! Jump between tools:

Cursor free: 50 requests

Copilot free trial: 30 days

Cody free: 100 requests

Replit free: Limited but usable

Rotate through them. Reset monthly. Stay in free tiers longer.

Is this “gaming the system”? Sure. But the system is designed to hook you with a free taste anyway. Play the game.

2. Be Strategic About When You Use Premium Features

Save AI chat and multi-file edits for when you really need them:

Debugging a gnarly issue: Use it

Refactoring core architecture: Use it

Writing a simple function: Type it yourself

You’ll hit limits less often and get more value per token.

3. Optimize Your Prompts

The more context you dump into chat, the more tokens you consume:

Bad: Paste entire file + question (3,000 tokens)

Good: Paste specific function + question (500 tokens)

6x difference in token usage for the same answer.
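You can sanity-check prompt size before sending it. The sketch below uses the common rule of thumb of roughly four characters per token for English text. It is only an estimate: code and non-English text often tokenize differently, and each model has its own tokenizer. The file sizes in the usage lines are hypothetical stand-ins.

```python
def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate from character count (rule of thumb, not a tokenizer)."""
    return round(len(text) / chars_per_token)


# Hypothetical sizes: a ~12 KB source file vs. the ~2 KB function in question.
whole_file = "x" * 12_000
one_function = "x" * 2_000
print(estimate_tokens(whole_file), estimate_tokens(one_function))
# prints: 3000 500
```

For exact counts, use the tokenizer that matches the model you are calling.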

4. Vote with Your Churn

These companies are terrified of churn. If you cancel and tell them why, they listen.

“I hit my rate limit on day 10 every month. I need 2x the current limit to stay.”

They track this feedback. If enough users say it, they adjust (slightly).

5. Wait for Consolidation

Right now, pricing is in chaos. Tools are undercutting each other, all losing money on power users.

In 12-24 months, the market will consolidate. 2-3 tools will dominate. Pricing will stabilize (higher).

If you can wait, the mature market will have better clarity on sustainable pricing. Less churn. More certainty.

6. Consider the Direct API Play

If you’re technical enough, using raw APIs directly might be cheaper:

Build your own editor integration

Use model aggregators (OpenRouter, Together.ai)

Pay only for what you use

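Downsides: No UX, no support, more work. Upsides: 50-80% cost savings are possible if your usage is moderate or you route most requests to cheaper models. A heavy user paying the market rates above can end up spending more than a flat subscription.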

It’s DIY, but it’s honest.
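Whether the direct route actually saves money depends on volume. Here is a minimal sketch that uses this article's own assumptions ($5/M input, $15/M output, 70% of tokens as input, which works out to $8 per million tokens blended) and compares them with a $20 flat subscription.

```python
INPUT_PRICE_PER_M = 5.00
OUTPUT_PRICE_PER_M = 15.00
INPUT_PERCENT = 70

# Blended price per 1M tokens under the assumed input/output split.
BLENDED_PER_M = (INPUT_PERCENT * INPUT_PRICE_PER_M
                 + (100 - INPUT_PERCENT) * OUTPUT_PRICE_PER_M) / 100


def api_cost(tokens_m: float) -> float:
    """Monthly pay-as-you-go cost for `tokens_m` million tokens."""
    return tokens_m * BLENDED_PER_M


def breakeven_tokens_m(subscription: float = 20.00) -> float:
    """Monthly volume (millions of tokens) where API cost equals the subscription."""
    return subscription / BLENDED_PER_M


for label, tokens_m in [("average user", 1.3), ("Sarah", 7.0)]:
    print(f"{label}: ${api_cost(tokens_m):.2f} via API vs $20.00 subscription")
print(f"break-even: {breakeven_tokens_m():.1f}M tokens/month")
```

At these rates, raw APIs come out ahead below about 2.5M tokens a month. Above that, any savings come from routing most traffic to cheaper models, not from the per-token price itself.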

The Endgame: Where This All Goes

Five years from now, one of three things happens:

Scenario 1: The SaaS Normalization

AI coding tools become like Slack or Figma:

$100-150/seat/month for teams

Consumers pay $30-50/month

Features are robust, limits are generous

Companies are profitable

Everyone grumbles about the cost but pays it

This is the most likely outcome. We accept higher prices because the value is undeniable.

Scenario 2: The Open Source Takeover

Open source models catch up:

Local inference becomes viable (on-device AI)

Self-hosted solutions dominate

Consumer tools collapse or pivot to enterprise

Only companies with proprietary data survive

This is the optimistic hacker dream. Less likely but possible.

Scenario 3: The Vertical Fragmentation

The market splits by use case:

Different tools for different languages

Different tools for different industries

Different tools for different skill levels

Niche winners everywhere, no dominant player

This is the messy middle. Possible but chaotic.

My bet? Scenario 1, with shades of 3.

Prices go up, features get better, a few tools dominate but others survive in niches.

And you’ll complain about paying $50/month for Cursor.

But you’ll pay it. Because you’ve tried coding without AI, and you’re never going back.

The Real Lesson: The Squeeze Is the System

The companies closest to you—the ones building the tools you love—aren’t screwing you over.

They’re trapped in an economic vise:

Backend costs are high and rising (new features = more tokens)

Competitive pressure keeps prices low

Users expect unlimited or near-unlimited usage

Unit economics are underwater for heavy users

So they squeeze. They throttle. They tier. They limit.

Not because they’re greedy. Because they’re trying to survive.

And you, the user, feel the squeeze:

Rate limits when you’re in flow state

Quality downgrades when you most need help

Upsell prompts when you hit walls

It’s frustrating. It’s by design. And it’s not going away.

Because the economics of AI—at least for now—don’t support the “unlimited everything” dream we all want.

The tools closest to you are stuck between your expectations and reality’s constraints.

They’re trying to give you as much as they can without going bankrupt.

And honestly? They’re doing a pretty impressive job at threading that needle.

Even if it does mean your code editor now has a monthly budget.

The Token Trap isn’t a bug. It’s not even really a trap.

It’s just the economics of selling magic at scale.

And we’re all learning what that costs.
