The Context Paradox: Why More Memory Doesn't Make Smarter AI

Your brain doesn't remember what you had for lunch three Tuesdays ago, and that's exactly why you're intelligent.

While AI researchers chase ever-longer context windows, now stretching to millions of tokens, they're missing a fundamental insight from neuroscience. The human neocortex, working with the hippocampus as a complementary learning system, doesn't achieve intelligence by storing every detail of experience. Instead, it ruthlessly compresses, abstracts, and generalizes. It forgets the irrelevant and crystallizes the essential.

Modern AI systems are doing the opposite. They're becoming digital hoarders, cramming everything into context and expecting magic to emerge from the mess. The results aren't pretty.

When Memory Becomes Poison

Context Poisoning occurs when errors embed themselves in the expanding context, becoming persistent sources of confusion.

Initial State:    After Poisoning:  Persistent Error:
┌─────────┐       ┌─────────┐       ┌─────────┐
│ Goal: A │       │ Goal: A │       │ Goal: X │ ← Wrong!
├─────────┤   →   ├─────────┤   →   ├─────────┤
│ Plan: B │       │ Plan: B │       │ Plan: Y │ ← Based on X
├─────────┤       ├─────────┤       ├─────────┤
│ Facts   │       │ Facts   │       │ Facts   │
└─────────┘       │ ERROR! ←│       │ ERROR! ←│
                  └─────────┘       └─────────┘
                       ↑                 ↑
           Hallucination enters   Error propagates

Google's Gemini team documented this perfectly: their Pokémon-playing agent would hallucinate game states, then spend hundreds of turns chasing impossible goals based on that false information. The error became a persistent delusion, referenced and reinforced with each subsequent action.

Your brain has a solution for this: sleep. During slow-wave sleep, the hippocampus replays experiences to the neocortex, which decides what to keep and what to discard. Errors get filtered out; patterns get preserved.
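An agent loop can approximate this filtering step by checking what the model claims against what the environment actually reports before anything enters long-lived context. The sketch below is only an illustration under stated assumptions: the `admit` function, the dictionary shapes, and the game-state keys are all hypothetical, and a real agent would need a far richer notion of verification.

```python
def admit(claims: dict[str, str], observed: dict[str, str]) -> tuple[dict[str, str], dict[str, str]]:
    """Split model claims into those confirmed by observation and those held back."""
    accepted, quarantined = {}, {}
    for key, value in claims.items():
        # Only claims that match the observed state are allowed into context.
        if observed.get(key) == value:
            accepted[key] = value
        else:
            quarantined[key] = value
    return accepted, quarantined


# Hypothetical game state: the model believes it holds a key that it does not.
claims = {"location": "Viridian City", "has_key": "yes"}
observed = {"location": "Viridian City", "has_key": "no"}

accepted, quarantined = admit(claims, observed)
print(accepted)     # {'location': 'Viridian City'}
print(quarantined)  # {'has_key': 'yes'}
```

The quarantined claim never becomes a "fact" that later turns can build goals on, which is the failure the diagram above describes.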

The Distraction Trap

Context Distraction happens when accumulated history overwhelms the model's ability to think clearly.

Training Knowledge:        vs.        Overwhelming Context:
┌──────────────┐                      ┌─────────────────┐
│ Patterns     │                      │ Action 1: ...   │
│ Strategies   │                      │ Action 2: ...   │
│ Principles   │ ←─ Should guide      │ Action 3: ...   │
│ Concepts     │    decisions         │ ...             │
└──────────────┘                      │ Action 847: ... │ ← Fixated
                                      │ Action 848: ... │   on this
       ↑                              │ Action 849: ... │
Gets drowned out                      └─────────────────┘

Databricks found that even powerful models like Llama 3.1 405B start degrading around 32k tokens. The Gemini agent, with access to over 100k tokens, began compulsively repeating past actions instead of synthesizing new strategies.

This mirrors a fascinating aspect of human memory. We don't recall every step of learning to drive—we extract the essential patterns and discard the details. If we couldn't forget our first clumsy attempts, we'd never improve.
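One way to act on this in an agent, sketched below: once the history exceeds a budget, collapse the older turns into a single summary and keep only the most recent turns verbatim. Every name here (`Turn`, `count_tokens`, `summarize`, `compact`) is hypothetical, the word count is a crude stand-in for a real tokenizer, and the summarizer is a placeholder for what would in practice be a model call.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    role: str
    text: str


def count_tokens(text: str) -> int:
    # Assumption: whitespace-separated words approximate tokens.
    return len(text.split())


def summarize(turns: list[Turn]) -> Turn:
    # Placeholder: keep the first sentence of each turn.
    gist = " ".join(t.text.split(".")[0].strip() + "." for t in turns)
    return Turn(role="summary", text=gist)


def compact(history: list[Turn], budget: int, keep_recent: int = 2) -> list[Turn]:
    """Collapse older turns into one summary turn once the history exceeds the budget."""
    total = sum(count_tokens(t.text) for t in history)
    if total <= budget or len(history) <= keep_recent:
        return history
    split = len(history) - keep_recent
    return [summarize(history[:split])] + history[split:]


history = [Turn("agent", f"Step {i} done. Details follow here.") for i in range(1, 6)]
compacted = compact(history, budget=10)
print(len(compacted))     # 3: one summary turn plus the two most recent turns
print(compacted[0].text)  # Step 1 done. Step 2 done. Step 3 done.
```

The design choice is the same one the driving example makes: the details of early attempts are dropped, and only their gist survives.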

When Everything Becomes Nothing

Context Confusion emerges when irrelevant information pollutes decision-making.

Relevant Tools:               Irrelevant Tools:
┌─────────────┐               ┌─────────────────┐
│ Calculator  │ ← Needed      │ Weather API     │
│ Database    │   for task    │ Music Player    │
└─────────────┘               │ File Converter  │
                              │ Email Client    │ ← Model pays
                              │ Photo Editor    │   attention to
                              │ ...45 more...   │   ALL of these
                              └─────────────────┘
                                       ↓
                              Wrong tool selection

The Berkeley Function-Calling Leaderboard reveals this clearly: every model performs worse when given multiple tools, even when only one is relevant. Smaller models fail catastrophically—a quantized Llama 3.1 8B couldn't handle 46 tools but succeeded with just 19.

The neocortex faces a similar challenge with sensory input. You're not consciously processing every photon hitting your retina or every sound wave reaching your ears. Attention mechanisms filter ruthlessly, allowing higher-order reasoning to focus on what matters.
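A software analogue of that filtering is to load only the tools that look relevant to the task at hand, rather than exposing all of them on every call. The following is a minimal sketch, not a production ranking method: `select_tools`, the tool names, and their descriptions are all made up for illustration, and plain word overlap stands in for whatever relevance scoring a real system would use.

```python
def select_tools(task: str, tools: dict[str, str], limit: int = 3) -> list[str]:
    """Return up to `limit` tool names whose descriptions share words with the task."""
    task_words = set(task.lower().split())
    scored = []
    for name, description in tools.items():
        overlap = len(task_words & set(description.lower().split()))
        if overlap > 0:
            scored.append((overlap, name))
    # Highest overlap first; ties broken alphabetically so the result is deterministic.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in scored[:limit]]


# Hypothetical tool registry.
TOOLS = {
    "calculator": "evaluate arithmetic and compute totals",
    "database": "query sales records and totals",
    "weather_api": "fetch the weather forecast",
    "music_player": "play songs",
    "photo_editor": "crop and resize images",
}

print(select_tools("compute quarterly sales totals", TOOLS))
# ['calculator', 'database']
```

Only the two selected tool definitions would then be placed in the prompt; the rest never reach the model.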

The Contradiction Crisis

Context Clash occurs when accumulated information contains internal contradictions.

Turn 1: "The answer is probably X"
Turn 2: New info suggests "Actually, it might be Y"
Turn 3: More data indicates "Definitely Z"

Final Context:
┌─────────────────────────────────────┐
│ Early attempt: "Answer is X"        │ ← Still present
│ Revised thinking: "Maybe Y"         │ ← Conflicts
│ New evidence: "Actually Z"          │ ← Current
│ Additional context...               │
└─────────────────────────────────────┘
                  ↓
    Model gets confused by its own
    earlier, incomplete reasoning

Researchers at Microsoft and Salesforce demonstrated this directly. They took benchmark problems and split them across multiple conversation turns, mimicking real-world agent interactions. Performance dropped by an average of 39%. Even OpenAI's o3 fell from 98.1% to 64.1% accuracy.

The problem isn't the information itself—it's that the model's early, incomplete reasoning remains in context, creating interference with later, more informed analysis.
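One hedged way to reduce that interference is to keep only the most recent conclusion per topic before the next model call. The sketch below assumes the agent records its conclusions as structured notes; the `Note` type, its fields, and `latest_claims` are hypothetical names, and real conversations rarely arrive this neatly labeled.

```python
from dataclasses import dataclass


@dataclass
class Note:
    topic: str  # what the conclusion is about (hypothetical label)
    claim: str  # the conclusion reached at that turn
    turn: int   # the turn in which it was written


def latest_claims(notes: list[Note]) -> list[Note]:
    """Keep one note per topic: the one written in the most recent turn."""
    newest: dict[str, Note] = {}
    for note in notes:
        current = newest.get(note.topic)
        if current is None or note.turn >= current.turn:
            newest[note.topic] = note
    return sorted(newest.values(), key=lambda n: n.turn)


notes = [
    Note("answer", "probably X", 1),
    Note("units", "metres", 2),
    Note("answer", "maybe Y", 2),
    Note("answer", "definitely Z", 3),
]
print([n.claim for n in latest_claims(notes)])
# ['metres', 'definitely Z']
```

The superseded guesses X and Y are dropped, so the later, better-informed conclusion no longer competes with them.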

Lost in the Middle

Positional Bias reveals that context windows aren't uniformly accessible—models systematically ignore the middle.

Context Window:
┌─────────────────────────────────────────┐
│       ████████ Beginning ████████       │ ← High attention
│                                         │
│     ░░░░░░░░░ Middle ░░░░░░░░░░░░░      │ ← Ignored!
│                                         │
│          ████████ End ████████          │ ← High attention
└─────────────────────────────────────────┘

Stanford researchers found that language models perform best when relevant information sits at the beginning or end of the input, and that performance degrades significantly when the model must use information from the middle of a long context. This is the "lost in the middle" problem, and it affects most LLMs.

Even at context lengths of only 2,000 tokens, far below the advertised window, models can fail to find a simple sentence embedded in the middle. Your neocortex is far less constrained in this way: it can retrieve memories from any period of your life, not only the earliest and the most recent.
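Given that bias, one common workaround is to arrange retrieved passages so the most relevant ones land at the edges of the prompt and the least relevant fall in the middle. This is a small sketch of that reordering, assuming the passages are already ranked best-first; `place_at_edges` is a hypothetical helper, not a method taken from the research cited here.

```python
def place_at_edges(ranked: list[str]) -> list[str]:
    """Take items ranked best-first; return an order with the best items at both ends."""
    front, back = [], []
    for i, item in enumerate(ranked):
        # Alternate: even ranks fill the front, odd ranks fill the back.
        (front if i % 2 == 0 else back).append(item)
    return front + back[::-1]


# "A" is the most relevant passage, "E" the least.
print(place_at_edges(["A", "B", "C", "D", "E"]))
# ['A', 'C', 'E', 'D', 'B']
```

The top two passages end up first and last, while the weakest one sits in the middle, where it matters least if it is overlooked.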

The Know-But-Don't-Tell Phenomenon

Internal-External Disconnection occurs when models encode relevant information but fail to use it in responses.

Internal Representations:        vs.        Generated Output:
┌─────────────────────┐                     ┌─────────────────┐
│ Layer 12: ████████  │ ← Knows answer      │ "I don't know"  │
│ Layer 18: ████████  │ ← Identifies pos    │                 │
│ Layer 24: ████████  │ ← High confidence   │ Wrong answer    │
└─────────────────────┘                     └─────────────────┘

Researchers found that LLMs often encode the position of the crucial information in their internal representations, yet fail to use it when generating a response. They call this the "know but don't tell" phenomenon: retrieval and utilization are separate steps, and the second can fail even when the first succeeds.

This suggests that the bottleneck isn't storage or recognition—it's the translation from internal knowledge to external expression. The human brain faces no such disconnect; what we know, we can generally access and communicate.

Context Overflow

Computational Explosion makes longer contexts prohibitively expensive, creating practical limits.

Context Length:    Memory Required:    Processing Time:
1x            →    1x             →    1x
2x            →    4x             →    4x
4x            →    16x            →    16x
8x            →    64x            →    64x

When a text sequence doubles in length, an LLM requires four times as much memory and compute to process it. This quadratic scaling limits LLMs to shorter sequences during training and, effectively, to shorter context windows during inference. The KV cache must also be held in memory during decoding; in one reported configuration, with a batch size of 512 and a context length of 2,048, the KV cache totals 3TB, which is 3x the model size.

Human memory doesn't scale quadratically. We can hold conversations for hours without the mental effort compounding with every added minute; our biological attention mechanisms are fundamentally more efficient.
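The quadratic progression in the table above can be reproduced with a few lines of arithmetic. The sketch counts the entries in a full self-attention score matrix, which grow with the square of the sequence length. It also includes the standard KV cache size formula, which grows only linearly with context length. The model dimensions in the example call are hypothetical and are not meant to reproduce the 3TB figure quoted above.

```python
def attention_score_entries(seq_len: int) -> int:
    """Entries in one full self-attention score matrix: seq_len x seq_len."""
    return seq_len * seq_len


def kv_cache_bytes(layers: int, kv_heads: int, head_dim: int,
                   seq_len: int, batch: int, bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for every layer, head, and position."""
    # Factor of 2: one key tensor and one value tensor per layer.
    return 2 * layers * kv_heads * head_dim * seq_len * batch * bytes_per_value


base = 1024
for factor in (1, 2, 4, 8):
    ratio = attention_score_entries(base * factor) // attention_score_entries(base)
    print(f"{factor}x length -> {ratio}x score entries")
# 1x length -> 1x score entries
# 2x length -> 4x score entries
# 4x length -> 16x score entries
# 8x length -> 64x score entries

# Hypothetical model: 32 layers, 32 KV heads, head dimension 128, 16-bit values.
print(kv_cache_bytes(32, 32, 128, seq_len=2048, batch=1))  # 1073741824 (1 GiB)
```

Note the difference between the two: doubling `seq_len` quadruples the score matrix but only doubles the KV cache, so the cache becomes a problem mainly through large batches and very long contexts.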

Reasoning Degradation

Performance Decay occurs even when models operate well below their theoretical limits.

Advertised Capacity:    vs.    Actual Performance:
┌─────────────────┐            ┌─────────────────┐
│ 1M+ tokens      │            │ Peak: ~4k tokens│
│                 │            │ ████████        │
│                 │     →      │ ██████          │
│                 │            │ ████            │
│ Theoretical     │            │ ██              │
└─────────────────┘            └─────────────────┘
                                  Rapid decline

As input length increases, reasoning capability declines notably, even well below the maximum context limits of current models. Performance dips across the board as tasks become more complex, and some models, such as Qwen and Mistral, degrade linearly with input length. Reasoning degrades quickly even at input lengths of 3,000 tokens, far shorter than the technical maximum.

This isn't just about storage—it's about maintaining coherent reasoning as information accumulates. The human brain maintains reasoning quality across extended thought processes, suggesting fundamentally different information processing mechanisms.

The Neuroscience of Intelligence

Your brain solves these problems through hierarchical abstraction. The neocortex doesn't store raw experiences—it extracts patterns, builds models, and discards the rest. A master chess player doesn't remember every move from every game; they recognize patterns that transcend specific positions.

Raw Experience:              Hierarchical Abstraction:
┌─────────────────┐          ┌─────────────────────────┐
│ Move 1: Pawn e4 │          │ Level 3: Strategic      │
│ Move 2: Knight  │          │          Principles     │
│ Move 3: Castle  │    →     ├─────────────────────────┤
│ Move 4: Pawn    │          │ Level 2: Tactical       │
│ ...             │          │          Patterns       │
│ Move 47: Queen  │          ├─────────────────────────┤
└─────────────────┘          │ Level 1: Piece Values   │
                             └─────────────────────────┘
    Raw storage                   Compressed wisdom

This is why human experts can operate with remarkable efficiency in their domains. They've compressed vast experience into portable principles. They've learned what to forget.

The Vendor Mirage

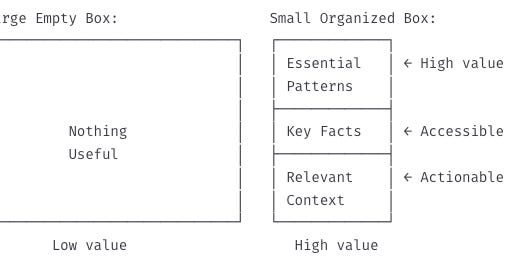

Here's the uncomfortable truth: longer context windows don't automatically translate to more value. Context is like a box—what matters isn't the size, but what you put in it and how you organize the contents.

Large Empty Box:                   Small Organized Box:
┌─────────────────────────────┐    ┌─────────────┐
│                             │    │ Essential   │ ← High value
│                             │    │ Patterns    │
│                             │    ├─────────────┤
│           Nothing           │    │ Key Facts   │ ← Accessible
│           Useful            │    ├─────────────┤
│                             │    │ Relevant    │ ← Actionable
│                             │    │ Context     │
└─────────────────────────────┘    └─────────────┘
           Low value                 High value

Yet the AI industry is rushing to sell you bigger boxes. Vendors pitch "memory as a service" and "cognition as a service"—Turing-complete abstractions with knowledge graphs you can supposedly bolt onto your infrastructure like adding RAM to a server. They promise that their mechanistic memory systems will guide effective behavior through pure storage and retrieval.

If someone pitches you memory or cognition as a service, ask them this: Do you understand the complexity of human cognition? Do you grasp how the neocortex performs hierarchical abstraction? Can you explain why forgetting is essential to intelligence?

The answers will tell you everything you need to know about whether they're solving the right problem or just selling you a bigger box.

Real cognitive architectures aren't about expanding storage—they're about intelligent curation. They don't memorize everything; they learn what to ignore. They don't store raw data; they extract meaning. They don't accumulate context; they distill wisdom.

The Path Forward

The race for longer context windows assumes that more memory equals more intelligence. But intelligence isn't about storage capacity—it's about selective attention, hierarchical abstraction, and graceful forgetting.

The most promising AI systems will likely follow the brain's lead: aggressive summarization, dynamic tool loading, and context quarantining. They'll learn not just what to remember, but what to forget.

Because in the end, the art of intelligence isn't in capturing everything—it's in knowing what matters.

References

Context Failures Research:

Liu, N. F., Lin, K., Hewitt, J., Paranjape, A., Belinkov, Y., & Liang, P. (2023). Lost in the Middle: How Language Models Use Long Contexts. Transactions of the Association for Computational Linguistics. https://direct.mit.edu/tacl/article/doi/10.1162/tacl_a_00638/119630/Lost-in-the-Middle-How-Language-Models-Use-Long

Lu, T., Chen, S., Zheng, C., Wu, Q., Lin, X., & Zhou, J. (2024). Insights into LLM Long-Context Failures: When Transformers Know but Don't Tell. arXiv preprint arXiv:2406.14673. https://arxiv.org/abs/2406.14673

Hosseini, P., et al. (2024). Efficient Solutions For An Intriguing Failure of LLMs: Long Context Window Does Not Mean LLMs Can Analyze Long Sequences Flawlessly. arXiv preprint arXiv:2408.01866. https://arxiv.org/abs/2408.01866

Position Bias and Memory:

Yu, Y., et al. (2024). Mitigate Position Bias in Large Language Models via Scaling a Single Dimension. arXiv preprint arXiv:2406.02536. https://arxiv.org/abs/2406.02536

He, J., et al. (2023). Never Lost in the Middle: Mastering Long-Context Question Answering with Position-Agnostic Decompositional Training. arXiv preprint arXiv:2311.09198. https://arxiv.org/abs/2311.09198

Computational Costs:

IBM Research. (2025). Why larger LLM context windows are all the rage. https://research.ibm.com/blog/larger-context-window

Wang, B., & Gao, W. (2024). Scaling to Millions of Tokens with Efficient Long-Context LLM Training. NVIDIA Technical Blog. https://developer.nvidia.com/blog/scaling-to-millions-of-tokens-with-efficient-long-context-llm-training

Performance Degradation:

Li, H., et al. (2024). LongICLBench: Long-context LLMs Struggle with Long In-context Learning. arXiv preprint arXiv:2404.02060. https://arxiv.org/abs/2404.02060

Levy, I., et al. (2024). Same Task, More Tokens: the Impact of Input Length on the Reasoning Performance of Large Language Models. arXiv preprint arXiv:2402.14848. https://arxiv.org/abs/2402.14848

Industry Analysis:

Databricks. (2024). Long Context RAG Performance of LLMs. https://www.databricks.com/blog/long-context-rag-performance-llms

Scale AI. (2024). A Guide to Improving Long Context Instruction Following. https://scale.com/blog/long-context-instruction-following